Real time face recognition python

In this tutorial, we will explain the meaning of face recognition and how to do real-time face recognition with OpenCV in Python. Face recognition is a simple task for humans, who recognize faces effectively from inner features such as the eyes, nose, and mouth, or from outer features such as the head, face, and hairline. David Hubel and Torsten Wiesel showed that our brain has specialized nerve cells that respond to specific local features of a scene, such as lines, edges, angles, or movement.

In the face recognition process, an algorithm is used to find the features that are present in an image.

- Face Detection:-

Face detection means finding the faces in an image and extracting them so they can be used by the face recognition algorithm.

- Face Recognition:-

The face recognition algorithm finds the features that describe the face in the image. The facial image has already been extracted, cropped, resized, and usually converted to grayscale.

There are various algorithms for face detection and face recognition.

Face recognition using OpenCV:-

The image is cropped, resized, and converted to grayscale.

This technique verifies and identifies a face from digital images.

Human beings can recognize faces very effectively.

Our brain combines the different sources of information into useful patterns.

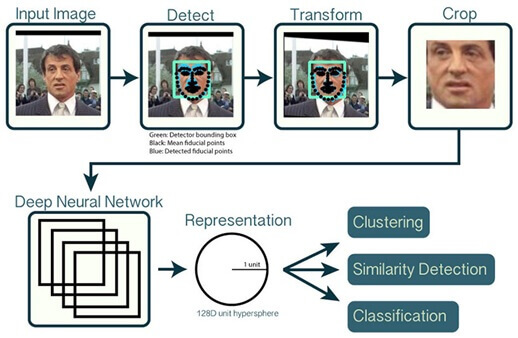

We do not see the visual input as scattered pieces. In the same spirit, we can define face recognition as: "Automatic face recognition is all about extracting the meaningful features from an image, putting them into a useful representation, and performing classification on them."

One approach to face recognition depends on the geometric features of a face.

It is a feasible and intuitive approach to face recognition.

Early automated face recognition systems described a face by the positions of the eyes, ears, and nose.

These positions form a feature vector, and faces are matched by calculating the Euclidean distance between the feature vectors of a probe image and a reference image.
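The geometric approach can be sketched as follows, assuming landmark (x, y) positions are already available from some detector. The landmark names and coordinates are hypothetical, and dividing every distance by the distance between the eyes is one simple choice (an assumption, not part of the original system) that makes the vector independent of image scale.

```python
import numpy as np


def geometric_features(landmarks):
    """Build a feature vector of pairwise landmark distances.

    `landmarks` maps hypothetical names (which must include "left_eye" and
    "right_eye") to (x, y) positions. Each distance is divided by the
    inter-eye distance so the vector does not depend on image scale.
    """
    names = sorted(landmarks)  # fixed order so vectors are comparable
    points = np.array([landmarks[n] for n in names], dtype=float)
    eye_dist = np.linalg.norm(
        np.subtract(landmarks["left_eye"], landmarks["right_eye"]))
    feats = []
    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            feats.append(np.linalg.norm(points[i] - points[j]) / eye_dist)
    return np.array(feats)


def euclidean_distance(a, b):
    """Euclidean distance between two feature vectors."""
    return float(np.linalg.norm(np.asarray(a) - np.asarray(b)))


# Hypothetical probe and reference landmarks (pixel coordinates).
probe = {"left_eye": (30, 40), "right_eye": (70, 40), "nose": (50, 60), "mouth": (50, 80)}
reference = {"left_eye": (60, 80), "right_eye": (140, 80), "nose": (100, 120), "mouth": (100, 160)}
print(euclidean_distance(geometric_features(probe), geometric_features(reference)))
```

Here the reference landmarks are the probe landmarks scaled by 2, so the distance is zero up to rounding; a smaller distance means more similar facial geometry.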

The files in the project root directory:-

encode_faces.py:- Computes the encodings for the faces in the dataset.

search_bing_api.py:- Used to build a dataset; it shows how to use the Bing Search API to build a dataset with a script.

recognize_faces_image.py:-

Recognizes the faces in a single image based on the encodings from the dataset.

recognize_faces_video.py:-

Recognizes faces in a live video stream from your webcam and outputs a video.

recognize_faces_video_file.py:-

Recognizes faces in a video file on disk and writes the output video to disk.

encodings.pickle:-

The encodings generated from the dataset by encode_faces.py and serialized to disk.
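The exact layout of encodings.pickle is not shown in this tutorial. A minimal sketch, assuming it holds a dictionary with parallel "encodings" and "names" lists (an assumed structure; save_encodings and load_encodings are hypothetical helper names), could serialize and reload the data with Python's pickle module:

```python
import pickle

import numpy as np


def save_encodings(encodings, names, path="encodings.pickle"):
    """Serialize face encodings and their person names to disk.

    The {"encodings": [...], "names": [...]} layout is an assumption.
    """
    if len(encodings) != len(names):
        raise ValueError("need exactly one name per encoding")
    data = {
        "encodings": [np.asarray(e, dtype=float) for e in encodings],
        "names": list(names),
    }
    with open(path, "wb") as f:
        pickle.dump(data, f)


def load_encodings(path="encodings.pickle"):
    """Load the dictionary written by save_encodings."""
    with open(path, "rb") as f:
        return pickle.load(f)
```

Only unpickle files you created yourself: loading pickle data from an untrusted source can execute arbitrary code.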

Face recognition applications include:-

1) Customer service:-

Banks in Malaysia have installed systems that use face recognition to detect their customers.

2) Facial authentication:-

Apple has brought Face ID to iPhones for facial authentication, and some banks use facial authentication for their lockers.

3) Insurance underwriting:-

Insurers use face recognition to match a person's face with the photo ID proof they provide.

This makes the underwriting process faster.

How OpenCV's face recognition works:-

- Apply face detection, which detects the presence and location of a face in an image but doesn't identify it.

- Extract the 128-d feature vectors that quantify each face in the image.

Face alignment is the process of identifying the geometric structure of a face and attempting to obtain a canonical alignment of the face based on translation, rotation, and scale.
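One common way to obtain such a canonical alignment is to use the two eye centers: rotate so the eyes lie on a horizontal line, scale so they are a fixed distance apart, and translate them to fixed positions. The sketch below builds that 2x3 similarity matrix with NumPy; the eye coordinates, the 256-pixel output size, and the canonical eye positions (35% from the left and top) are assumptions, not values from this tutorial. The matrix has the layout cv2.warpAffine expects, so it could be applied with cv2.warpAffine(image, M, (256, 256)).

```python
import numpy as np


def alignment_matrix(left_eye, right_eye, out_size=256, eye_x=0.35, eye_y=0.35):
    """Return a 2x3 similarity transform (rotation, uniform scale,
    translation) mapping the detected eye centres to canonical positions.

    The left eye lands at (eye_x, eye_y) * out_size and the right eye at
    (1 - eye_x, eye_y) * out_size; these fractions are assumptions.
    """
    lx, ly = map(float, left_eye)
    rx, ry = map(float, right_eye)
    angle = np.arctan2(ry - ly, rx - lx)  # tilt of the line between the eyes
    eye_dist = np.hypot(rx - lx, ry - ly)
    target_left = np.array([eye_x * out_size, eye_y * out_size])
    target_dist = (1.0 - 2.0 * eye_x) * out_size
    scale = target_dist / eye_dist
    c, s = np.cos(angle), np.sin(angle)
    # Rotate by -angle (to level the eyes) and scale uniformly.
    R = scale * np.array([[c, s], [-s, c]])
    # Translate so that the left eye lands on its target position.
    t = target_left - R @ np.array([lx, ly])
    return np.hstack([R, t[:, None]])


M = alignment_matrix(left_eye=(100, 120), right_eye=(160, 140))
eyes = np.array([[100, 120, 1], [160, 140, 1]], dtype=float)
print(eyes @ M.T)  # both eyes now lie on the same row of the output
```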

Our face recognition dataset:-


Example:-

The dataset we use today contains three classes:

- Myself

- Trisha (my wife)

- “Unknown”, which represents the faces of people we do not know

Each class contains a total of six images.

Understanding the face recognition OpenCV source code:-

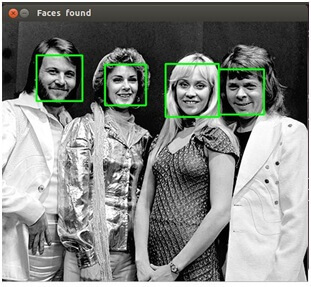

The project contains the face_detect.py script, the abba.png picture, and the haarcascade_frontalface_default.xml cascade file.

import sys
import cv2

imagePath = sys.argv[1]

cascPath = sys.argv[2]

First, we pass the image and cascade names as command-line arguments.

faceCascade = cv2.CascadeClassifier(cascPath)

This creates the face cascade and initializes it with our cascade file, which loads the cascade into memory and makes it ready to use.

image = cv2.imread(imagePath)

gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

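The detection itself is performed with the following call (OpenCV 3 and later name the flag cv2.CASCADE_SCALE_IMAGE; OpenCV 2.4 used cv2.cv.CV_HAAR_SCALE_IMAGE):

faces = faceCascade.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5, minSize=(30, 30), flags=cv2.CASCADE_SCALE_IMAGE)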

- detectMultiScale is a general function that detects objects; since we are calling it on the face cascade, it detects faces.

- The first argument is the grayscale image, and the second is scaleFactor.

- Some faces may be closer to the camera, so they appear bigger than the faces in the back.

- The scale factor compensates for this.

- The detection algorithm uses a moving window to detect objects.

- minNeighbors defines how many objects must be detected near the current one before it declares a face found.

- minSize, meanwhile, gives the minimum size of each window.
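To see why scaleFactor matters, the conceptual sketch below lists the window sizes a multi-scale sliding-window detector would try, starting at minSize and growing by scaleFactor until the window no longer fits. This is an illustration of the idea only, not OpenCV's internal implementation (OpenCV may rescale the image rather than the window), and scan_window_sizes is a hypothetical helper.

```python
def scan_window_sizes(image_size, min_size=(30, 30), scale_factor=1.1):
    """List the window sizes a multi-scale sliding-window detector would try.

    Starts at min_size and grows by scale_factor until the window no longer
    fits inside image_size (width, height). Conceptual sketch only.
    """
    if scale_factor <= 1.0:
        raise ValueError("scale_factor must be greater than 1")
    img_w, img_h = image_size
    min_w, min_h = min_size
    sizes = []
    scale = 1.0
    while True:
        w, h = round(min_w * scale), round(min_h * scale)
        if w > img_w or h > img_h:
            break
        sizes.append((w, h))
        scale *= scale_factor
    return sizes


# A larger scaleFactor tries fewer window sizes: faster, but coarser.
print(len(scan_window_sizes((100, 100), scale_factor=1.1)))
print(len(scan_window_sizes((100, 100), scale_factor=1.2)))
```

Fewer scales means fewer chances for a spurious match, which is one reason raising scaleFactor can remove a false positive, at the cost of possibly missing faces whose size falls between two scales.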

print("Found {0} faces!".format(len(faces)))

for (x, y, w, h) in faces:

    cv2.rectangle(image, (x, y), (x + w, y + h), (0, 255, 0), 2)

For each detected face, the function returns the x and y coordinates of the rectangle, along with its width w and height h.

These values are used to draw the rectangle with the built-in rectangle() function.

cv2.imshow("Faces found", image)

cv2.waitKey(0)

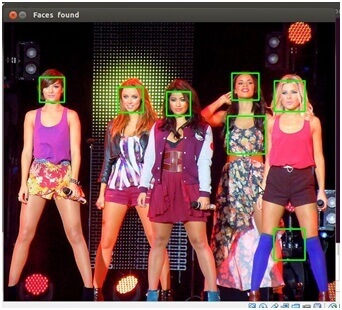

The script is run as follows:

$ python face_detect.py abba.png haarcascade_frontalface_default.xml

Then the next photo:

With the original settings it did not detect the faces correctly, so I changed the parameters and found that setting scaleFactor to 1.2 got rid of the wrong face.

- The first photo was taken close up with a high-quality camera.

- The second one was taken from afar, possibly with a mobile phone.

- So scaleFactor had to be modified; you have to tune the algorithm on a case-by-case basis to avoid false positives.

- Because it is based on machine learning, the results will never be 100% accurate.

- You will get good results in most cases, but occasionally the algorithm will identify incorrect objects as faces.

- The final code is as follows

A face recognition system can operate in basically two modes:

Verification or authentication of a facial image:-

It compares the input facial image with the facial image of the user who is requesting authentication. It is a 1:1 comparison.

Identification or facial recognition:-

It compares the input facial image with the images in a dataset to find the user that matches that input face. It is a 1:N comparison.
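The difference between the two modes can be sketched with face encodings and a distance threshold. This is a minimal sketch under stated assumptions: the encodings are plain numeric vectors (for example the 128-d vectors mentioned earlier), the comparison uses Euclidean distance, and the 0.6 threshold is an assumed value (it is the default tolerance of the dlib-based face_recognition library, but the right value depends on the encoder). verify and identify are hypothetical helper names.

```python
import numpy as np


def verify(probe, reference, threshold=0.6):
    """1:1 verification: accept the claimed identity if the probe encoding
    is within `threshold` (Euclidean distance) of that user's encoding."""
    dist = np.linalg.norm(np.asarray(probe, dtype=float) - np.asarray(reference, dtype=float))
    return bool(dist <= threshold)


def identify(probe, known_encodings, known_names, threshold=0.6):
    """1:N identification: return the name of the closest known encoding,
    or "Unknown" if even the closest one is farther than `threshold`."""
    known = np.asarray(known_encodings, dtype=float)
    dists = np.linalg.norm(known - np.asarray(probe, dtype=float), axis=1)
    best = int(np.argmin(dists))
    return known_names[best] if dists[best] <= threshold else "Unknown"


# Toy 3-d encodings standing in for real 128-d vectors.
known = [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0]]
print(identify([0.9, 1.0, 1.0], known, ["me", "trisha"]))
```

Verification needs only one comparison against the claimed user, while identification must search the whole dataset, which is why the "Unknown" fallback matters in the 1:N case.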

There are several types of face recognition algorithms, including:

- Local Binary Patterns Histograms (LBPH) (1996)

- Fisherfaces (1997)

- Scale Invariant Feature Transform (SIFT) (1999)

- Speeded Up Robust Features (SURF) (2006)

- Eigenfaces (1991)

Each algorithm follows a different approach to extract the image information and match it with the input image.
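To give a flavor of one of these approaches, here is a minimal Eigenfaces-style sketch in NumPy: it computes the mean face, finds the principal components with an SVD, projects every training face onto them, and predicts the label of the nearest training projection. The random toy images, the number of components, and the labels are illustrative assumptions; this is a teaching sketch, not OpenCV's Eigenface recognizer.

```python
import numpy as np


def train_eigenfaces(faces, num_components):
    """Fit Eigenfaces: mean face, top principal components, and the
    projection (weights) of every training face."""
    X = np.asarray(faces, dtype=float).reshape(len(faces), -1)  # one row per face
    mean = X.mean(axis=0)
    # Rows of vt are the principal directions ("eigenfaces") of the centred data.
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    components = vt[:num_components]
    weights = (X - mean) @ components.T
    return mean, components, weights


def predict(face, mean, components, weights, labels):
    """Project a face into eigenface space and return the nearest label."""
    w = (np.asarray(face, dtype=float).ravel() - mean) @ components.T
    dists = np.linalg.norm(weights - w, axis=1)
    return labels[int(np.argmin(dists))]


# Toy data: six random 10x10 "faces" standing in for real grayscale crops.
rng = np.random.default_rng(0)
faces = rng.random((6, 10, 10))
labels = ["me", "me", "trisha", "trisha", "unknown", "unknown"]
mean, components, weights = train_eigenfaces(faces, num_components=4)
print(predict(faces[2], mean, components, weights, labels))
```

Unlike LBPH, which looks at local texture, Eigenfaces treats the whole image as one high-dimensional vector and compresses it into a few global components.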

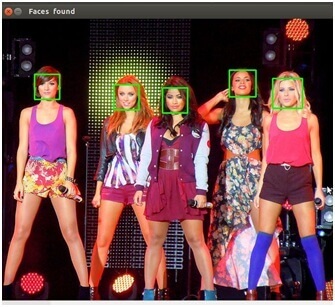

Introduction to LBPH:-

LBPH (Local Binary Pattern Histogram) is a simple approach that labels the pixels of an image by thresholding the neighborhood of each pixel.

LBPH summarizes the local structure in an image by comparing each pixel with its neighbors; the result is converted into a binary number.

LBP was first described in 1994 and has since been found to be a powerful feature for texture classification.

This algorithm focuses on extracting local features from images.

The basic idea is not to treat the whole image as a high-dimensional vector, but to focus only on the local features of an object.

In the above image, we take a pixel as the center and threshold its neighbors against it.

If the intensity of a neighbor is greater than or equal to that of the center pixel, it is denoted by 1; otherwise, it is denoted by 0.
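The thresholding step for a single 3x3 neighborhood can be sketched as below. It uses the standard LBP convention in which a neighbor greater than or equal to the center gives a 1 bit. Reading the bits clockwise starting from the top-left neighbor is an assumption: implementations differ in the start position and direction, which changes the code value but not the idea.

```python
import numpy as np

# Clockwise order of the 8 neighbours, starting at the top-left pixel (assumed).
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(patch):
    """Return the 8-bit LBP code of the centre pixel of a 3x3 patch.

    A neighbour >= centre contributes a 1 bit, otherwise a 0 bit; the
    first neighbour in OFFSETS becomes the most significant bit.
    """
    patch = np.asarray(patch)
    center = patch[1, 1]
    code = 0
    for dy, dx in OFFSETS:
        bit = 1 if patch[1 + dy, 1 + dx] >= center else 0
        code = (code << 1) | bit
    return code


patch = [[90, 80, 70],
         [60, 50, 40],
         [30, 20, 10]]
print(lbp_code(patch))  # bits 11100001 -> 225
```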

Steps of the algorithm:

- Selecting the Parameters:-

LBPH accepts four parameters:

- Neighbors: The number of sample points used to build the circular local binary pattern.

- Radius: The radius around the central pixel, usually set to 1.

- Grid X: The number of cells in the horizontal direction.

- Grid Y: The number of cells in the vertical direction.

Radius and Neighbors together are used to build the circular local binary pattern.
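Putting the parameters together, the sketch below computes an LBP code for every interior pixel, splits the code image into Grid X by Grid Y cells, and concatenates one 256-bin histogram per cell into the final feature vector. It assumes the simplest variant (Radius 1 with 8 Neighbors on the square 3x3 ring, no interpolated circular sampling), so treat it as an illustration rather than OpenCV's exact implementation.

```python
import numpy as np

# The 8 neighbours at radius 1, clockwise from the top-left (assumed order).
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_image(gray):
    """Compute 8-neighbour, radius-1 LBP codes for every interior pixel."""
    g = np.asarray(gray, dtype=np.int32)
    h, w = g.shape
    center = g[1:-1, 1:-1]
    codes = np.zeros_like(center)
    for dy, dx in OFFSETS:
        neighbour = g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes = (codes << 1) | (neighbour >= center).astype(np.int32)
    return codes


def lbph_features(gray, grid_x=8, grid_y=8):
    """Split the LBP image into grid_y rows x grid_x columns of cells and
    concatenate one 256-bin histogram per cell."""
    codes = lbp_image(gray)
    hists = []
    for row in np.array_split(codes, grid_y, axis=0):
        for cell in np.array_split(row, grid_x, axis=1):
            hist, _ = np.histogram(cell, bins=256, range=(0, 256))
            hists.append(hist)
    return np.concatenate(hists)


img = (np.arange(100 * 100).reshape(100, 100) * 7) % 256
feats = lbph_features(img, grid_x=4, grid_y=4)
print(feats.shape)  # (4096,) = 4 * 4 cells * 256 bins
```

A finer grid keeps more information about where each texture pattern occurs, at the cost of a longer feature vector.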

Real time face recognition OpenCV Python:-

The system is capable of identifying/verifying a person from a video frame.

To recognize a face in a frame, we first need to detect whether a face is present in the frame.

If one is present, we mark it as a region of interest (ROI), extract the ROI, and process it for facial recognition.
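Extracting the ROI is just array slicing once the detector has returned (x, y, w, h) boxes, such as those produced by detectMultiScale. The minimal sketch below assumes the frame is a NumPy array (as OpenCV frames are) and clamps each box to the frame borders; extract_rois and the example boxes are hypothetical.

```python
import numpy as np


def extract_rois(frame, boxes):
    """Crop each (x, y, w, h) detection box out of a frame.

    Boxes are clamped to the frame borders; empty crops are skipped.
    Slicing returns views, so copy a ROI before modifying it.
    """
    frame_h, frame_w = frame.shape[:2]
    rois = []
    for (x, y, w, h) in boxes:
        x0, y0 = max(0, x), max(0, y)
        x1, y1 = min(frame_w, x + w), min(frame_h, y + h)
        if x1 > x0 and y1 > y0:
            rois.append(frame[y0:y1, x0:x1])
    return rois


# A blank 640x480 BGR frame and two hypothetical detections,
# the second one partly outside the frame.
frame = np.zeros((480, 640, 3), dtype=np.uint8)
rois = extract_rois(frame, [(10, 20, 100, 50), (600, 450, 100, 100)])
print([roi.shape for roi in rois])  # [(50, 100, 3), (30, 40, 3)]
```

Note that NumPy indexes rows (y) before columns (x), which is why the slice is frame[y0:y1, x0:x1].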

Real time face recognition software:-

This project is divided into two parts:

- Creating a database

- Training and testing.

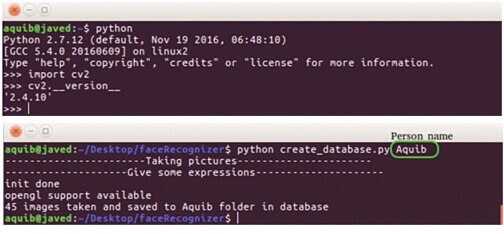

Creating a database:-

To create the database, run the create_database.py script and take pictures of the person to be recognized.

It automatically creates a Train folder in the database folder, containing the faces to be recognized.

You can then rename the Train folder to the person's name.

While creating the database, the face images must have different expressions, which is why the code adds a 0.38-second delay between captures when creating the data set.
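The role of the delay can be sketched as a capture loop that pauses after every saved sample. This is a hedged sketch, not the create_database.py code: grab_face is a hypothetical callable that would return a face crop from the camera (or None when no face is found), and the sample count and attempt limit are assumptions.

```python
import time


def capture_samples(grab_face, count=10, delay=0.38, max_attempts=1000):
    """Collect `count` face crops, pausing `delay` seconds after each one.

    `grab_face` is a hypothetical callable that returns a face crop from the
    camera, or None when no face is found in the current frame.
    """
    samples = []
    for _ in range(max_attempts):
        if len(samples) >= count:
            break
        face = grab_face()
        if face is not None:
            samples.append(face)
            time.sleep(delay)  # give the subject time to change expression
    return samples
```

In a real script, grab_face would read a frame from the webcam, run the face cascade, and return the cropped face; writing each sample into the Train folder is left out here.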

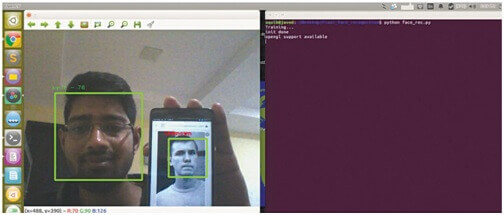

Training and testing:-

Training and face recognition are done next; the face_rec.py code does everything.

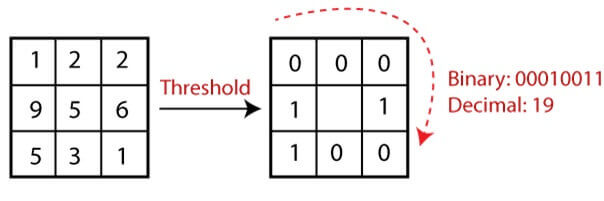

Fig. 1: Screenshot of Haar features:-

Face detection is the process of finding or locating one or more human faces in a frame or image.

The Haar feature-based algorithm by Viola and Jones is used for face detection.

All human faces share some common properties, and these regularities can be matched using Haar features.

Two properties common to human faces are:-

- The nose bridge region is brighter than the eyes.

- The eye region is darker than the upper cheeks.

Compositions of properties forming matchable facial features are:-

- Location and size: eyes, mouth, and bridge of the nose.

- Value: oriented gradients of pixel intensities.

For example, the value of a two-rectangle feature is the difference in brightness between its black and white rectangles:

Value = Σ (pixels in black area) - Σ (pixels in white area)

The Haar algorithm evaluates these features over regions of the image to find those that match a face.
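Viola and Jones made these rectangle sums cheap with an integral image (summed-area table): after one pass over the image, the sum of any rectangle takes four lookups. The sketch below computes the two-rectangle value from the formula above; placing the black rectangle on top and the white one below is an assumption for illustration, since real cascades use many feature shapes and orientations.

```python
import numpy as np


def integral_image(img):
    """Summed-area table padded with a leading row and column of zeros,
    so ii[r, c] is the sum of img[:r, :c]."""
    img = np.asarray(img, dtype=np.int64)
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = np.cumsum(np.cumsum(img, axis=0), axis=1)
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of pixels in the w x h rectangle with top-left corner (x, y)."""
    return ii[y + h, x + w] - ii[y, x + w] - ii[y + h, x] + ii[y, x]


def two_rect_feature(ii, x, y, w, h):
    """Two-rectangle Haar feature: black top half minus white bottom half
    (h is assumed to be even)."""
    half = h // 2
    black = rect_sum(ii, x, y, w, half)
    white = rect_sum(ii, x, y + half, w, half)
    return black - white


img = np.arange(16).reshape(4, 4)
ii = integral_image(img)
print(rect_sum(ii, 1, 1, 2, 2))          # 5 + 6 + 9 + 10 = 30
print(two_rect_feature(ii, 0, 0, 4, 4))  # 28 - 92 = -64
```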

Testing procedure:-

Installation: install OpenCV for Python on Ubuntu.

The project was tested on Ubuntu 16.04 with OpenCV 2.4.10; a shell script installs all the dependencies required for OpenCV and also installs OpenCV 2.4.10.

After installing OpenCV, we check it in the terminal by running import cv2 in the Python interpreter.

- Create the database, making at least two data sets in it, and then run the recognizer script.

Run the recognizer script:

Training starts first, and then the camera opens.

The accuracy depends on the number of data sets, their quality, and the lighting conditions.

Fig. 4: Screenshot of face detection

OpenCV provides the following three face recognizers:

- LBPH face recognizer

- Eigenface recognizer

- Fisherface recognizer